US researchers have developed a new "plug and play" brain-machine interface that includes machine learning. Their goal is to restore some autonomy to paralyzed patients.

New solutions are gradually emerging to give some autonomy back to people with paralysis. For example, the National Institutes of Health (NIH) in the United States has bet on exoskeletons, with the aim of helping children with cerebral palsy and easing their disability.

However, brain-computer interfaces (BCIs), also called direct neural interfaces, are full of promise too, because they allow direct communication between brain and machine. This type of technology is developing steadily, in particular through the use of tools such as machine learning. In a study published in the journal Nature Biotechnology on September 7, 2020, researchers at the UCSF Weill Institute for Neurosciences shared their advances.

According to Karunesh Ganguly, lead author of the study, BCIs have made undeniable progress. However, these systems must be recalibrated daily, so they cannot take advantage of the brain's natural learning processes. The lack of stability of these interfaces is therefore one of the most limiting obstacles.

According to the study's authors, users have to train every day to teach their brain to communicate with the machine. Moreover, the machine itself is initially unable to retain this learning in order to improve.

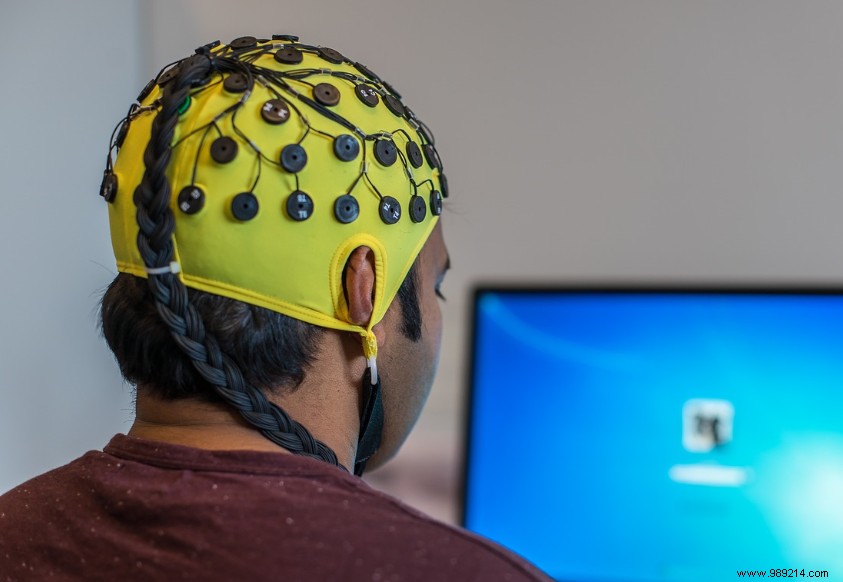

The plug and play interface that the researchers developed is intended to fix this problem. It is based on machine learning and electrocorticography (ECoG), a form of intracranial electroencephalography. ECoG involves placing electrodes on the surface of the patient's brain in order to record neural activity over the long term. Compared to electrodes inserted directly into the gray matter, this solution is less precise but appears to be more stable.

The scientists therefore chose ECoG to conduct tests on quadriplegic patients. By imagining moving their arm and wrist, the patient sends a signal to the interface via ECoG. The interface translates this imagined movement into a command and relays it to a computer whose screen displays a cursor. This chain of information allowed the patient to move the cursor on the screen.
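The chain described above (recorded signal, decoded command, cursor movement) can be pictured with a minimal sketch. It assumes a simple linear decoder that turns a vector of signal features into a cursor velocity; the feature values, the weights, and the names `decode_velocity` and `step` are hypothetical and are not taken from the study.

```python
from dataclasses import dataclass


@dataclass
class Cursor:
    x: float = 0.0
    y: float = 0.0


def decode_velocity(features, weights):
    """Map one feature vector to a (vx, vy) command with a linear decoder."""
    vx = sum(w * f for w, f in zip(weights[0], features))
    vy = sum(w * f for w, f in zip(weights[1], features))
    return vx, vy


def step(cursor, features, weights, dt=0.1):
    """Apply the decoded velocity to the cursor for one time step of length dt."""
    vx, vy = decode_velocity(features, weights)
    return Cursor(cursor.x + vx * dt, cursor.y + vy * dt)


# Hypothetical decoder weights: one row for vx, one row for vy.
weights = [[1.0, 0.0, 0.5],
           [0.0, 1.0, -0.5]]

# Two made-up feature vectors standing in for recorded neural activity.
cursor = Cursor()
for features in [[1.0, 0.0, 0.0], [0.0, 2.0, 0.0]]:
    cursor = step(cursor, features, weights)
```

A real decoder would work on filtered multichannel recordings and would be fitted to the patient; the sketch only shows the direction in which information flows.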

By integrating machine learning, the researchers allowed the interface to gradually become familiar with the patient's commands. After several days of testing, the interface proved effective, as the brain built a mental model in parallel to better interact with the machine. For the researchers, this is akin to a collaboration between two learning systems. The research continues, and the interface may eventually become an extension of the user, comparable to their hand or arm.
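To illustrate why keeping what the decoder has learned matters, here is a toy comparison between a decoder that carries its weights over from one day to the next and one that restarts from zero every day. It is only a sketch on synthetic data: the update rule is a basic least-mean-squares (LMS) step chosen for simplicity, not the method used in the study, and `true_w`, the learning rate, and the session sizes are all assumptions.

```python
import random


def predict(w, x):
    """Linear prediction: dot product of weights and features."""
    return sum(wi * xi for wi, xi in zip(w, x))


def lms_update(w, x, target, lr=0.2):
    """One least-mean-squares step toward the target output."""
    err = target - predict(w, x)
    return [wi + lr * err * xi for wi, xi in zip(w, x)]


def run_day(w, samples, lr=0.2):
    """Train through one session; return updated weights and mean squared error."""
    total = 0.0
    for x, target in samples:
        total += (target - predict(w, x)) ** 2
        w = lms_update(w, x, target, lr)
    return w, total / len(samples)


rng = random.Random(0)
true_w = [0.8, -0.3, 0.5]  # hypothetical "intended" mapping, for simulation only


def make_day(n=50):
    """Generate one synthetic, noise-free session of (features, target) pairs."""
    day = []
    for _ in range(n):
        x = [rng.uniform(-1, 1) for _ in true_w]
        day.append((x, predict(true_w, x)))
    return day


days = [make_day() for _ in range(5)]

persistent = [0.0, 0.0, 0.0]  # weights kept across days
persistent_err, reset_err = [], []
for samples in days:
    persistent, e = run_day(persistent, samples)
    persistent_err.append(e)
    _, e = run_day([0.0, 0.0, 0.0], samples)  # recalibrated from scratch each day
    reset_err.append(e)
```

In this simulation both decoders behave identically on the first day, while on later days the one that keeps its weights starts each session close to the target mapping and the reset decoder has to relearn it.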